Welcome to EleutherAI

A grassroots collection of researchers working to open source AI research.

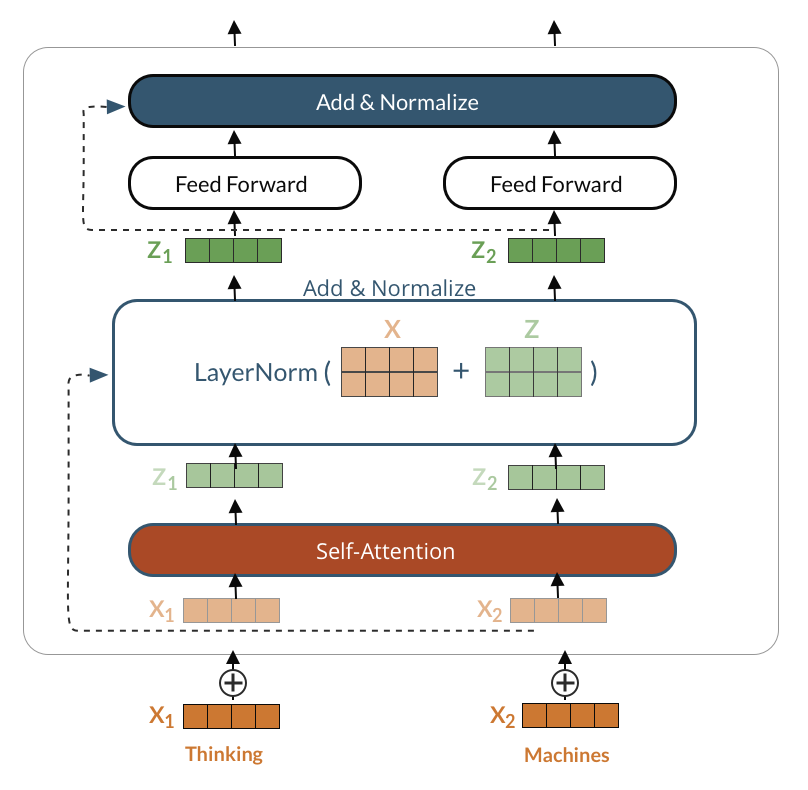

GPT-Neo

GPT-Neo is the name of our codebase for transformer-based language models loosely styled around the GPT architecture. One of our goals is to use GPT-Neo to replicate a GPT-3 sized model and open source it to the public, for free. Along the way we will be running experiments with alternative architectures and attention types, releasing any intermediate models, and writing up any findings on our blog. Our models are built in Mesh TensorFlow, which will allow us to scale up to GPT-3 sizes and beyond using simultaneous model and data parallelism.

The Pile

The Pile is an 825 GiB diverse, open source language modelling dataset consisting of data from 22 high quality sources. It is useful for both training and benchmarking large language models.

In our evaluations, models trained on the Pile show moderate improvements in traditional language modeling benchmarks, along with significant improvements on Pile BPB (our benchmarking measure).

The Pile is now complete! Check it out here.

OpenWebText2

OpenWebText2 is a dataset inspired by WebText, created by scraping URLs extracted from Reddit submissions up until April 2020 with a minimum score of 3 as a proxy for quality.

It features content from multiple languages, document metadata, multiple dataset versions, and open source replication code.

OpenWebText2 is complete! Check it out here.

Announcements

🔔🔔 Pile v1 Release 🔔🔔

Current Projects

(Last updated January 18, 2021)

- GPT-Neo: TPU Code, now working on scaling our GPU code to run 200B+ models.

- Multi-Modal Transformers: Data collection underway. We are especially interested in text-speech and text-image parallel corpuses.

- Radioactive Lab: Experiments begun. Reworking after feedback from authors.

- Scaling Laws: Just getting started.

Completed Projects

- Pile: Released, with paper preprint available.

- OpenWebText2: Released.